- Best student presentation award:

- Raphaël Carpintero Perez (Ecole Polytechnique, SAFRAN Tech)

- Best student poster awards:

- Louis Allain (Université de Rennes, SAFRAN Tech)

- Guerlain Lambert (Ecole Centrale de Lyon, INRAE)

The RT UQ blog

Announcements of highlights and upcoming scientific events in the field of numerical experimentation and uncertainty treatment

Wednesday, April 23, 2025

RT-UQ PhD Awards 2025

Monday, February 17, 2025

RT UQ PhD Day: Program

The program of the RT UQ PhD Day is available on the SAMO conference website.

Oral presentations: Go to https://samo2025.sciencesconf.org/program and click on each session to see the details.

Poster presentations: See https://samo2025.sciencesconf.org/data/program/RT_UQ_2025_Posters_1.pdf.

Tuesday, February 4, 2025

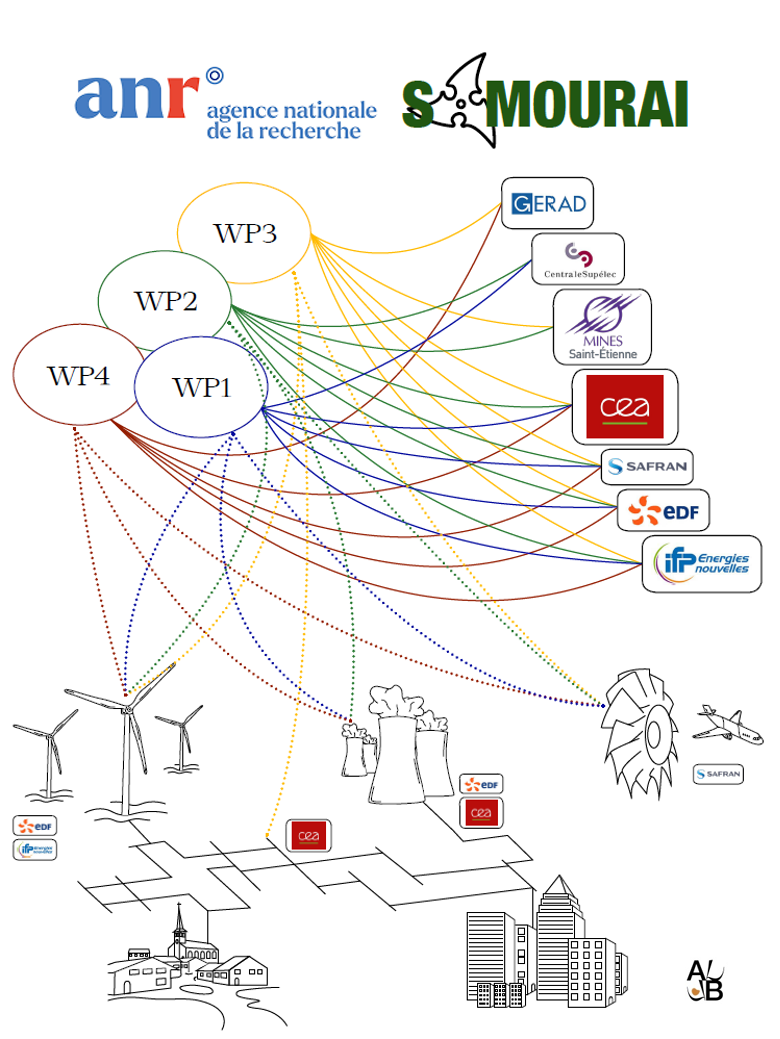

Workshop SAMOURAI: Slides

A big thank you to all the speakers & attendees for making this event a success!

The organizers (Delphine Sinoquet & Morgane Menz)

Wednesday, July 3, 2024

Workshop on "Bayesian optimization & related topics": Slides

The slides for the RT-UQ Workshop on "Bayesian optimization & related topics" (June 20, IHP) are now available.

Many thanks to all the speakers & attendees for making this event a success!

The organizers (Céline H., Delphine S. & Julien B.)

Friday, May 3, 2024

Workshop on "Bayesian optimization & related topics": Registration deadline

Please register before May 31 if you want to attend the RT-UQ Workshop on "Bayesian optimization & related topics" (June 20, IHP).

We need to know the number of participants in advance in order to size the buffet properly.

Thursday, September 21, 2023

Workshop on "Physics-informed learning"

Dear all,

We are pleased to announce that the detailed program of the next GdR MASCOT-NUM workshop on "Physics-informed learning", to be held on December 4-5, 2023 at the Institut de Mathématiques de Toulouse, is now available, and that registration is open!

If you are interested, please visit the event webpage:

https://indico.math.cnrs.fr/event/9994/

Hoping to see many of you in Toulouse!

The organizers

Olivier Roustant (INSA Toulouse) & Sébastien Da Veiga (ENSAI Rennes)

Tuesday, June 6, 2023

Workshop on "Calibration of numerical codes": Slides

The slides for all the presentations are now available on the workshop web page:

https://www.gdr-mascotnum.fr/may23